Pega is really focused on delivering enterprise-wide solutions. An enterprise typically has a complex organizational structure, sells more than one product or product type, has offices or branches in more than one location, and sells its products through various channels to several groups. One could say that the only stable factor in an enterprise is change itself :-)

Pega handles this complexity really well by internally following the structure of the organization, the products it delivers and the markets it serves. A software system built with Pega to support business processes typically stays close to the organizational structure of the enterprise, because management at the organizational, divisional and unit level all “need” their own influence over the software that supports the business processes they own. Certain regulations, such as those for human resource management, may be maintained at the organization level, while much other functionality is likely to differ at the divisional and unit level.

To make this work, Pega breaks functionality down to the level of what it calls “a rule”. Several rule types exist, such as flow rules to create process flows, user interface rules to create user interfaces, and decision rules to store business rules. A rule can be defined in one layer and specialized in another. This allows divisions and units to differ from each other while still complying with the enterprise rules defined at the organizational level.
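As a rough analogy only (this is not how Pega stores rules), defining a rule in one layer and specializing it in another behaves like a stack of dictionaries in which a lookup stops at the most specific layer that defines the rule. The sketch below uses Python's `collections.ChainMap`; the layers and rule names (`ApprovalLimit`, `IntakeFlow`, ...) are hypothetical.

```python
from collections import ChainMap

# Hypothetical rules per layer: rule name -> rule content (strings stand in for real rules)
enterprise = {
    "HRPolicy": "enterprise HR policy",
    "ApprovalLimit": "approve up to 1000",
}
division = {
    "ApprovalLimit": "approve up to 5000",  # the division specializes an enterprise rule
}
car_unit = {
    "ApprovalLimit": "approve up to 2500",  # the unit specializes it once more
    "IntakeFlow": "car claim intake flow",   # a rule that only exists at unit level
}

# ChainMap searches its maps left to right, so the most specific layer wins.
car_claims_app = ChainMap(car_unit, division, enterprise)
other_unit_app = ChainMap({}, division, enterprise)  # a unit without overrides of its own

for rule in ("ApprovalLimit", "HRPolicy", "IntakeFlow"):
    print(rule, "->", car_claims_app[rule])
print("ApprovalLimit", "->", other_unit_app["ApprovalLimit"])
```

The car claims unit gets its own approval limit, the other unit falls back to the divisional one, and both still see the single enterprise HR policy.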

Developers use the so-called “Enterprise Class Structure” (ECS) together with “Custom Built Frameworks” (CBF) and standard Pega frameworks to maximize reuse and development speed; see the example below:

A typical application uses rules defined at the unit level, which inherit rules from the division and enterprise layer(s) below. Rules from framework layers can be injected through multiple inheritance paths. For example, developers can build an application that handles car insurance claims on top of the Pega CRM framework, augmented with car insurance specifics defined in the custom built framework, while still complying with the reporting requirements by reusing the business rules defined at the divisional and enterprise level.
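The multiple inheritance paths can be pictured as an ancestor list that is searched from most specific to most generic. The sketch below assumes a simplified model in which a class first inherits along its name (pattern inheritance: `UPlus-Insurance-Car-Claim` to `UPlus-Insurance-Car` and so on) and then from an explicitly named parent (directed inheritance), which is how the framework layers are injected here. Pega's actual search order has more detail than this, and all class and rule names are hypothetical.

```python
from typing import Dict, List, Optional

# Hypothetical classes: class name -> directed parent (classes not listed have none).
DIRECTED_PARENT: Dict[str, Optional[str]] = {
    "UPlus-Insurance-Car-Claim": "CarFW-Claim",  # unit class injects the custom built framework
    "CarFW-Claim": "CRMFW-Case",                 # the CBF builds on a product framework
}

# Hypothetical rules: rule name -> classes the rule is defined on
RULES: Dict[str, List[str]] = {
    "ClaimIntake": ["CRMFW-Case"],                      # generic flow from the product framework
    "ValidateVehicle": ["CarFW-Claim"],                 # car insurance specifics from the CBF
    "ReportingRules": ["UPlus-Insurance"],              # divisional reporting requirements
    "ApprovalLimit": ["UPlus", "UPlus-Insurance-Car"],  # enterprise default, unit override
}

def pattern_parent(class_name: str) -> Optional[str]:
    """Simplified pattern inheritance: drop the last hyphen-separated segment."""
    return class_name.rsplit("-", 1)[0] if "-" in class_name else None

def ancestor_tree(class_name: str) -> List[str]:
    """Most specific first: the pattern chain of a class, then its directed parent's chain."""
    order: List[str] = []
    visited = set()
    current: Optional[str] = class_name
    while current is not None and current not in visited:
        visited.add(current)
        cls: Optional[str] = current
        while cls is not None:
            if cls not in order:
                order.append(cls)
            cls = pattern_parent(cls)
        current = DIRECTED_PARENT.get(current)
    return order

def find_class(rule_name: str, class_name: str) -> Optional[str]:
    """Return the class whose version of the rule wins, or None if no ancestor defines it."""
    defined_on = RULES.get(rule_name, [])
    for cls in ancestor_tree(class_name):
        if cls in defined_on:
            return cls
    return None

print(ancestor_tree("UPlus-Insurance-Car-Claim"))
```

Searching from the unit class, `ApprovalLimit` resolves to the unit's override, `ReportingRules` to the division, and `ValidateVehicle` and `ClaimIntake` to the two framework layers.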

Development and deployment are supported by rule-sets

To allow for easy deployment, rules can be grouped in rule-sets. An application typically comprises several rule-sets; the rules that make up a Custom Built Framework, for instance, could be stored in several rule-sets. Rule-sets follow the ECS, and although the rules for a unit could reside in a single rule-set, different aspects of those rules often need to be released independently of each other. Rule-sets are versioned, and multiple versions can exist side by side. Specific rules in a rule-set may be withdrawn or blocked to patch functionality and create quick fixes. Rules can also be marked final to ensure that they cannot be specialized any further.

Rule Resolution makes it work
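Rule resolution first has to respect the rule-set mechanics described above. The sketch below shows, for a single rule-set, how access to a rule-set version, a withdrawn rule and a blocked rule might determine which version of a rule is picked. It assumes the major-minor-patch version format (`01-02-05`) and models access to `ClaimsCar:01-02` as "same major version, minor version up to 02"; the rule-set and rule names are hypothetical, and the real behavior covers more cases.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

def parse_version(text: str) -> Tuple[int, int, int]:
    """'01-02-05' -> (1, 2, 5): major, minor and patch parts of a rule-set version."""
    major, minor, patch = (int(part) for part in text.split("-"))
    return (major, minor, patch)

@dataclass(frozen=True)
class RuleVersion:
    name: str
    ruleset: str
    version: str
    availability: str = "Available"  # this sketch only acts on "Withdrawn" and "Blocked"

def visible(rule: RuleVersion, ruleset: str, granted: str) -> bool:
    """Assumed access model: 'ClaimsCar:01-02' grants major 01 with minor version <= 02."""
    granted_major, granted_minor = (int(part) for part in granted.split("-"))
    major, minor, _ = parse_version(rule.version)
    return rule.ruleset == ruleset and major == granted_major and minor <= granted_minor

def pick(rules: List[RuleVersion], name: str, ruleset: str, granted: str) -> Optional[RuleVersion]:
    candidates = [r for r in rules if r.name == name and visible(r, ruleset, granted)]
    # In this sketch a withdrawn version hides itself and every lower version of the rule.
    for withdrawn in [r for r in candidates if r.availability == "Withdrawn"]:
        cutoff = parse_version(withdrawn.version)
        candidates = [r for r in candidates if parse_version(r.version) > cutoff]
    if not candidates:
        return None
    best = max(candidates, key=lambda r: parse_version(r.version))
    # A blocked winner stops resolution instead of falling back to an older version.
    return None if best.availability == "Blocked" else best

RULES = [
    RuleVersion("CalculatePremium", "ClaimsCar", "01-01-01"),
    RuleVersion("CalculatePremium", "ClaimsCar", "01-01-05"),
    RuleVersion("CalculatePremium", "ClaimsCar", "01-02-01"),          # new release
    RuleVersion("LegacyCheck", "ClaimsCar", "01-01-01"),
    RuleVersion("LegacyCheck", "ClaimsCar", "01-02-01", "Withdrawn"),  # retire the rule
    RuleVersion("RiskScore", "ClaimsCar", "01-01-01"),
    RuleVersion("RiskScore", "ClaimsCar", "01-02-01", "Blocked"),      # switch it off
]

print(pick(RULES, "CalculatePremium", "ClaimsCar", "01-02"))
```

An application granted `01-01` keeps running the older versions while one granted `01-02` picks up the new release, the withdrawal and the block. Marking a rule final is not modelled; the sketch treats it as a design-time restriction on specialization rather than part of this lookup.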

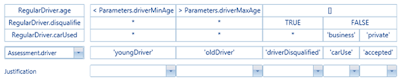

For this system to work and to allow for optimal reuse, Pega comes with an advanced rule resolution scheme. All rules are stored in a database under a rule name, together with the class they refer to and their rule type. At run-time the system determines the most appropriate rule to run, considering the operator, the operator's authorizations and access to frameworks, their place in the organization and so on. The system also weighs more technical aspects, such as the class name, circumstance values (the values provided at run-time) and the validity of the rule. Finally, rules can be blocked and withdrawn to support deployment, release schedules and quick fixes.

Pega offers an unparalleled system for reuse. It truly keeps its promise to deliver a system for enterprise-wide software delivery... in my opinion, Pega rocks!
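To make the run-time part of the rule resolution described above more concrete, here is a heavily simplified resolver that filters candidate rules on the operator's rule-set access, the class ancestry, the circumstance values and the validity dates, and then ranks what is left. It is a sketch under stated assumptions, not Pega's algorithm: the `Candidate` fields, the ranking order (closest class first, then circumstanced over base rules, then date-bound over undated rules) and all names are illustrative.

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, List, Optional, Set, Tuple

@dataclass(frozen=True)
class Candidate:
    name: str
    applies_to: str                                 # class the rule is defined on
    ruleset: str
    circumstance: Optional[Tuple[str, str]] = None  # (property, value); None = base rule
    valid_from: Optional[date] = None
    valid_to: Optional[date] = None

def resolve(candidates: List[Candidate], name: str, ancestors: List[str],
            accessible: Set[str], context: Dict[str, str], today: date) -> Optional[Candidate]:
    """ancestors: the class ancestry, most specific first (a hypothetical input)."""
    def applies(c: Candidate) -> bool:
        if c.name != name or c.ruleset not in accessible or c.applies_to not in ancestors:
            return False  # wrong rule, no access for this operator, or unrelated class
        if c.circumstance is not None and context.get(c.circumstance[0]) != c.circumstance[1]:
            return False  # circumstance does not match the values of this case
        if c.valid_from is not None and today < c.valid_from:
            return False  # not valid yet
        if c.valid_to is not None and today > c.valid_to:
            return False  # no longer valid
        return True

    def rank(c: Candidate) -> Tuple[int, bool, bool]:
        # Lower sorts first: closest class, then circumstanced, then date-bound rules.
        undated = c.valid_from is None and c.valid_to is None
        return (ancestors.index(c.applies_to), c.circumstance is None, undated)

    matches = [c for c in candidates if applies(c)]
    return min(matches, key=rank) if matches else None

ANCESTORS = ["UPlus-Insurance-Car", "UPlus-Insurance", "UPlus"]
CANDIDATES = [
    Candidate("ClaimLimit", "UPlus", "Enterprise"),
    Candidate("ClaimLimit", "UPlus-Insurance", "Insurance"),
    Candidate("ClaimLimit", "UPlus-Insurance", "Insurance", circumstance=("State", "NY")),
    Candidate("ClaimLimit", "UPlus-Insurance-Car", "CarClaims", valid_from=date(2030, 1, 1)),
    Candidate("ClaimLimit", "UPlus-Insurance-Car", "CarClaimsBeta"),  # operator has no access
]
ACCESS = {"Enterprise", "Insurance", "CarClaims"}

best = resolve(CANDIDATES, "ClaimLimit", ANCESTORS, ACCESS, {"State": "NY"}, date(2024, 6, 1))
print(best.applies_to, best.circumstance)  # UPlus-Insurance ('State', 'NY')
```

For a New York case today the circumstanced divisional rule wins; for another state the base divisional rule is used; and once the unit's date-bound rule becomes valid it takes over because its class is closer.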